One of the problems in distributed neural networks (DNNs) is managing the flow of data between nodes while balancing the compute-intensive portions of the feedforward and backpropagation passes.

This can be done in a variety of ways, including ensemble methods, in which each compute node holds a small group of neural networks rather than acting as a single hidden node that computes the sigmoid function.
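The contrast between the two layouts can be sketched in a few lines of Python. This is only an illustration, not the project's code: the names `hidden_node`, `SmallNetwork`, and `ensemble_predict` are hypothetical, the weights are random rather than trained, and averaging the member outputs is an assumed way of combining the ensemble.

```python
import math
import random


def sigmoid(x):
    """Logistic sigmoid, the activation a single hidden node computes."""
    return 1.0 / (1.0 + math.exp(-x))


def hidden_node(inputs, weights, bias):
    """One hidden node: weighted sum of the inputs passed through the sigmoid."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


class SmallNetwork:
    """A tiny one-hidden-layer network standing in for the work of one compute node.

    Hypothetical helper: weights are random here, whereas a real node would train them.
    """

    def __init__(self, n_inputs, n_hidden, rng):
        self.hidden = [
            ([rng.uniform(-1, 1) for _ in range(n_inputs)], rng.uniform(-1, 1))
            for _ in range(n_hidden)
        ]
        self.out_weights = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.out_bias = rng.uniform(-1, 1)

    def predict(self, inputs):
        # Hidden layer activations, then a single sigmoid output unit.
        activations = [hidden_node(inputs, w, b) for w, b in self.hidden]
        return hidden_node(activations, self.out_weights, self.out_bias)


def ensemble_predict(networks, inputs):
    """Combine the per-node networks by averaging their outputs (an assumption)."""
    return sum(net.predict(inputs) for net in networks) / len(networks)


rng = random.Random(0)
# Eight compute nodes, each holding its own small network.
networks = [SmallNetwork(n_inputs=3, n_hidden=4, rng=rng) for _ in range(8)]
prediction = ensemble_predict(networks, [0.2, 0.5, 0.1])
```

In the single-hidden-node layout, each compute node would evaluate only `hidden_node` and send one activation back. In the ensemble layout, each node evaluates a whole `SmallNetwork` locally, so only its final output has to cross the network.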

I am studying how work can be distributed effectively within a DNN and how that distribution affects network accuracy. The results apply primarily to classification and pattern-matching applications, although I am also working on using DNNs for statistical interpolation and extrapolation.

I have been training and testing my network on a variety of data from the Machine Learning Repository at the University of California, Irvine.

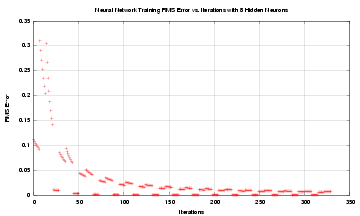

Below we see the RMS error rate for 8 distributed hidden nodes.
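For reference, the RMS error is the square root of the mean squared difference between the network's outputs and the target values. A minimal sketch, assuming plain Python lists of predictions and targets (the function name `rms_error` and the sample values are illustrative, not taken from the project):

```python
import math


def rms_error(predictions, targets):
    """Root-mean-square error between two equal-length sequences of numbers."""
    if len(predictions) != len(targets) or len(predictions) == 0:
        raise ValueError("predictions and targets must be non-empty and the same length")
    squared = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return math.sqrt(sum(squared) / len(squared))


# Made-up values purely to show the call; not results from the network.
example = rms_error([0.9, 0.2, 0.4], [1.0, 0.0, 0.5])
```

Tracking this value after each training epoch gives the kind of error curve described above.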

The serial version of the code can be found here.

I have used the following data sets to train and test my network:

* NOAA El Nino from UCI http://archive.ics.uci.edu/ml/datasets/El+Nino

* Black-Scholes http://www.scientific-consultants.com/nnbd.html

* Housing data http://lib.stat.cmu.edu/datasets/boston

* NASA Shuttle data http://archive.ics.uci.edu/ml/datasets/Statlog+%28Shuttle%29